Thread: I/O - Increase RAM

shared_buffers = 51605MB (I'll change it to 32GB)

effective_cache_size = 96760MB

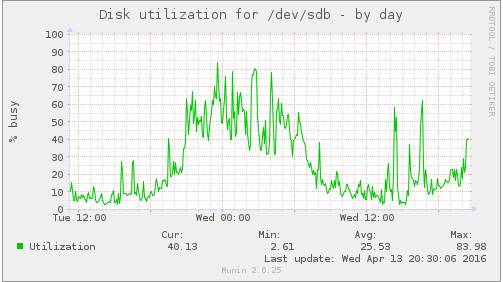

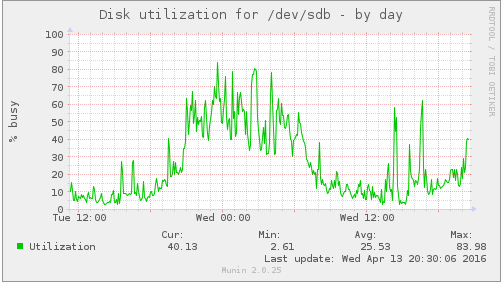

Attachment

On 04/13/2016 01:43 PM, drum.lucas@gmail.com wrote: > Question: > > I know that might not be the best option, but by increasing the RAM and > the CACHE would help, right? might, not necessarily would. JD -- Command Prompt, Inc. http://the.postgres.company/ +1-503-667-4564 PostgreSQL Centered full stack support, consulting and development. Everyone appreciates your honesty, until you are honest with them.

On 4/13/2016 1:43 PM, drum.lucas@gmail.com wrote: > At the moment I'm having 100% I/O during the day. My server has SATA > HDs, and it can't be changed now. > So, to solve the problem (or at least try) I was thinking about double > the RAM, and by doing that, increasing the cache. depends on if its read or write IO. many of our database servers are nearly 100% write IO, only thing that will speed that up is faster disks and/or more disks in raid10. -- john r pierce, recycling bits in santa cruz

On 4/13/2016 1:52 PM, John R Pierce wrote: > ... will speed that up is faster disks .... I left out faster RPM disks... faster sequential transfer speeds are generally of little impact to write-bound database servers as most of the writes are random, so its an issue of IOPS rather than MB/second. -- john r pierce, recycling bits in santa cruz

On 04/13/2016 01:43 PM, drum.lucas@gmail.com wrote:Question:

I know that might not be the best option, but by increasing the RAM and

the CACHE would help, right?

might, not necessarily would.

On 04/13/2016 01:59 PM, drum.lucas@gmail.com wrote: > > > On 14 April 2016 at 08:52, Joshua D. Drake <jd@commandprompt.com > <mailto:jd@commandprompt.com>> wrote: > > On 04/13/2016 01:43 PM, drum.lucas@gmail.com > <mailto:drum.lucas@gmail.com> wrote: > > Question: > > I know that might not be the best option, but by increasing the > RAM and > the CACHE would help, right? > > > might, not necessarily would. > > > Would be nice if you could explain why not / why yes For reads the more things in cache the more performance. For writes the topic is more complicated but the long and short is this: The moment you flush your cache (evict a bunch of buffers, linux dumping out its cache etc..) you are going to get hammered. JD -- Command Prompt, Inc. http://the.postgres.company/ +1-503-667-4564 PostgreSQL Centered full stack support, consulting and development. Everyone appreciates your honesty, until you are honest with them.

|From: John R Pierce Sent: Wednesday, April 13, 2016 1:53 PM | |On 4/13/2016 1:43 PM, drum.lucas@gmail.com wrote: |> At the moment I'm having 100% I/O during the day. My server has SATA |> HDs, and it can't be changed now. |> So, to solve the problem (or at least try) I was thinking about double |> the RAM, and by doing that, increasing the cache. | |depends on if its read or write IO. many of our database servers are |nearly 100% write IO, only thing that will speed that up is faster disks and/or |more disks in raid10. |-- |john r pierce, recycling bits in santa cruz Agree with John and also add that if your READ queries or data model are not optimized then you could throw a TB of ram atit and see no difference. You need to analyze your queries and find out WHY there's so much i/o. It sounds like thrashto me...so you need to do some homework and get the behaviors sorted out, then it should become obvious what needsfixing. Mike

It sounds as if you have *one* server with 128GB of memory.

Therefore the probabilities are that you have too many task running at the same time. Flushing the disk drives’ read/write cache.

Increasing the memory probably will not solve the problem. The most effective solution would be to separate the tasks that access the drive (read or write) often.

Alternatively if you have access to the code. Try to cache some of the disk activities.

The third solution would be to separate the tasks and move them to a different server.

Hope this helps.

From: pgsql-general-owner@postgresql.org [mailto:pgsql-general-owner@postgresql.org] On Behalf Of drum.lucas@gmail.com

Sent: 13 April 2016 21:44

To: Postgres General

Subject: [GENERAL] I/O - Increase RAM

Hi all,

At the moment I'm having 100% I/O during the day. My server has SATA HDs, and it can't be changed now.

So, to solve the problem (or at least try) I was thinking about double the RAM, and by doing that, increasing the cache.

The server has 128GB today:

shared_buffers = 51605MB (I'll change it to 32GB)

effective_cache_size = 96760MB

Question:

I know that might not be the best option, but by increasing the RAM and the CACHE would help, right?

Thanks

Lucas

Attachment

Sorry, by the first solution I meant to separate the drive for your db files from other tasks.

/////////////

It sounds as if you have *one* server with 128GB of memory.

Therefore the probabilities are that you have too many tasks running at the same time. Flushing the disk drives’ read/write cache.

Increasing the memory probably will not solve the problem. The most effective solution would be to separate the tasks that access the drive (read or write) often.

Alternatively if you have access to the code. Try to cache some of the disk activities.

The third solution would be to separate the tasks and move them to a different server.

Hope this helps.

From: pgsql-general-owner@postgresql.org [mailto:pgsql-general-owner@postgresql.org] On Behalf Of drum.lucas@gmail.com

Sent: 13 April 2016 21:44

To: Postgres General

Subject: [GENERAL] I/O - Increase RAM

Hi all,

At the moment I'm having 100% I/O during the day. My server has SATA HDs, and it can't be changed now.

So, to solve the problem (or at least try) I was thinking about double the RAM, and by doing that, increasing the cache.

The server has 128GB today:

shared_buffers = 51605MB (I'll change it to 32GB)

effective_cache_size = 96760MB

Question:

I know that might not be the best option, but by increasing the RAM and the CACHE would help, right?

Thanks

Lucas

Attachment

Hi all,At the moment I'm having 100% I/O during the day. My server has SATA HDs, and it can't be changed now.So, to solve the problem (or at least try) I was thinking about double the RAM, and by doing that, increasing the cache.The server has 128GB today:shared_buffers = 51605MB (I'll change it to 32GB)

effective_cache_size = 96760MBQuestion:I know that might not be the best option, but by increasing the RAM and the CACHE would help, right?

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sdb 0.00 236.30 1769.10 5907.30 20366.80 69360.80 23.38 36.38 4.74 0.34 6.06 0.09 71.00

Attachment

We're gonna need better stats. iostat, iotop, vmstat etc will all break down your io between reads and writes, random vs sequential etc.

I'll try to get more data during a spike

SPIKE:

rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

dm-2 0.00 0.00 129.00 585.10 5932.00 4680.80 14.86 26.82 37.58 1.40 99.80

If you're at 100% IO Util, and iostat says you're writing is taking up 20 or 30% of the time, then no, adding cache probably won't help.

Start looking into adding SSDs. They are literally 20 to 1000 times faster at a lot of io stuff than spinning drives. And they're relatively cheap for what they do.

Note that a software RAID-5 array of SSDs can stomp a hardware controller running RAID-10 with spinning disks easily, and RAID-5 is pretty much as slow as RAID gets.Here's a few minutes of "iostat -xd 10 /dev/sdb" on one of my big servers at work. These machines have a RAID-5 of 10x750GB SSDs under LSI MegaRAIDs with caching turned off. (much faster that way). The array created thus is 6.5TB and it's 83% full. Note that archiving and pg_xlog are on separate volumes as well.

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sdb 0.00 236.30 1769.10 5907.30 20366.80 69360.80 23.38 36.38 4.74 0.34 6.06 0.09 71.00

rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

dm-2 0.00 0.00 42.60 523.60 1644.80 4188.80 10.30 7.85 13.88 1.04 59.15

So we're seeing 1769 reads/s, 5907 writes/s and we're reading ~20MB/s and writing ~70MB/s. In the past this kind of performance from spinning disks required massive caching and cabinets full of hard drives. When first testing these boxes we got literally a fraction of this performance with 20 spinning disks in RAID-10, and they had 512GB of RAM. Management at first wanted to throw more memory at it, these machines go to 1TB RAM, but we tested with 1TB RAM and the difference was literally a few % points going from 512GB to 1TB RAM.If your iostat output looks anything like mine, with lots of wkB/s and w/s then adding memory isn't going to do much.

On 4/13/2016 9:12 PM, drum.lucas@gmail.com wrote: > I know.. but unfortunately the bosses don't want to spend money :( Time Actually Is Money. -- john r pierce, recycling bits in santa cruz