Thread:

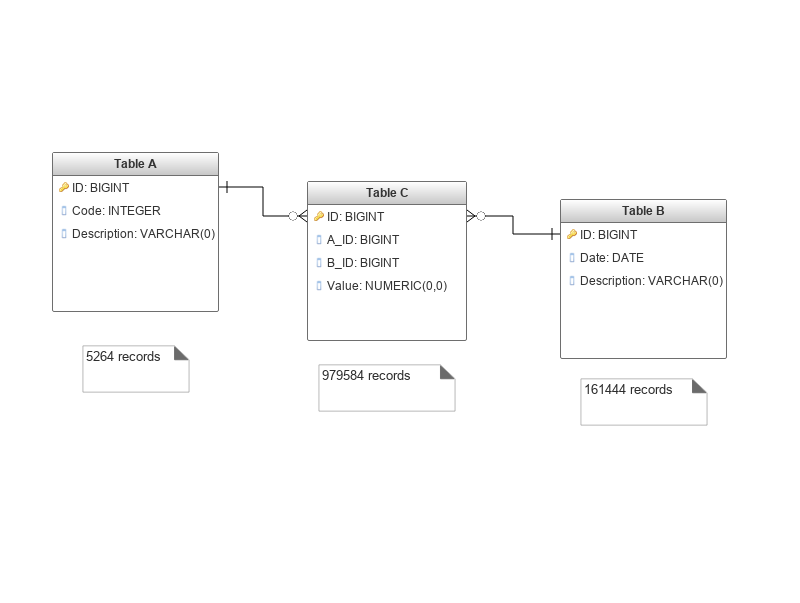

I have a report that takes about 20 minutes to generate. It is generated from 3 tables: according to image.

The report input parameter is a date range. So to generate it I select all records in Table A and run them

in loop-for. For each record in Table A I make a query Table B join with Table C where I filter the records through the date field and make the sum

of the value field.

Given this scenario, I would like your help in finding a solution that can reduce the generation time of this report. System developed in PHP / Laravel.

PostgreSQL

max_connections = 50

shared_buffers = 4GB

effective_cache_size = 12GB

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.7

wal_buffers = 16MB

default_statistics_target = 100

random_page_cost = 4

effective_io_concurrency = 2

work_mem = 83886kB

min_wal_size = 1GB

max_wal_size = 2GB

Linux Server CentOS 7, Single Xeon 4-Core E3-1230 v5 3.4Ghz w / HT, 16GB RAM.

I've already created indexes in the fields that are involved in the queries.

Database schema

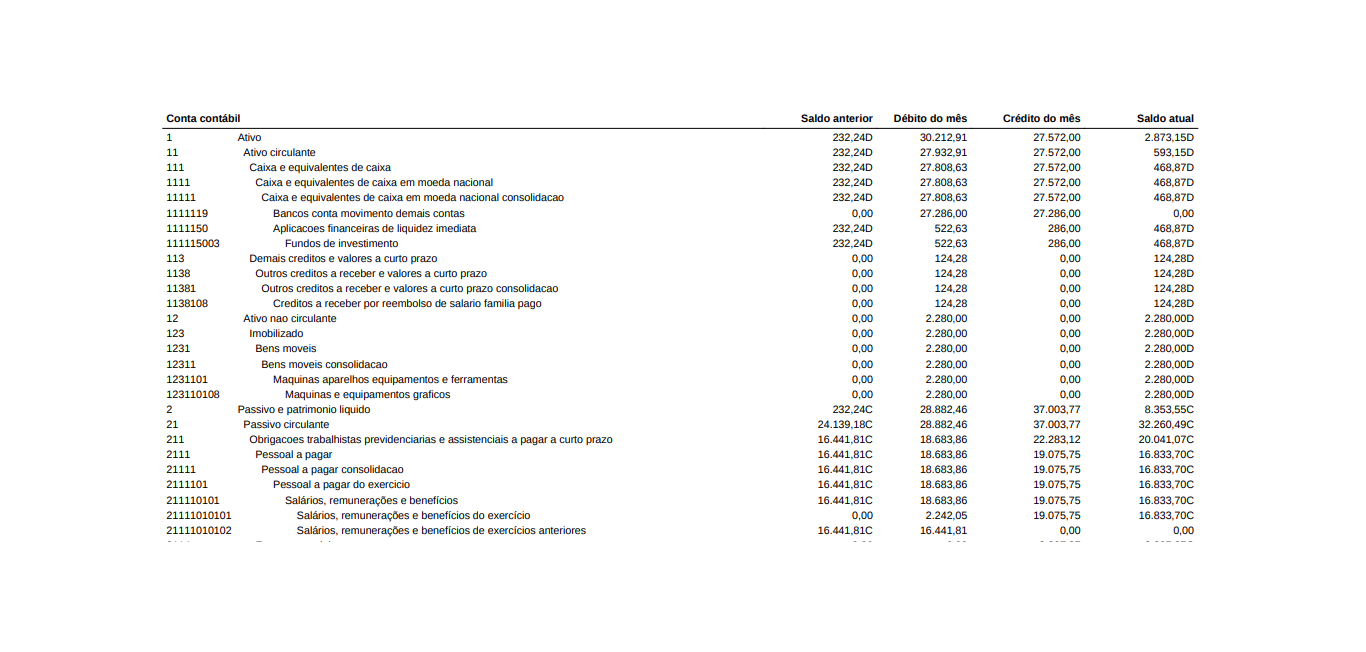

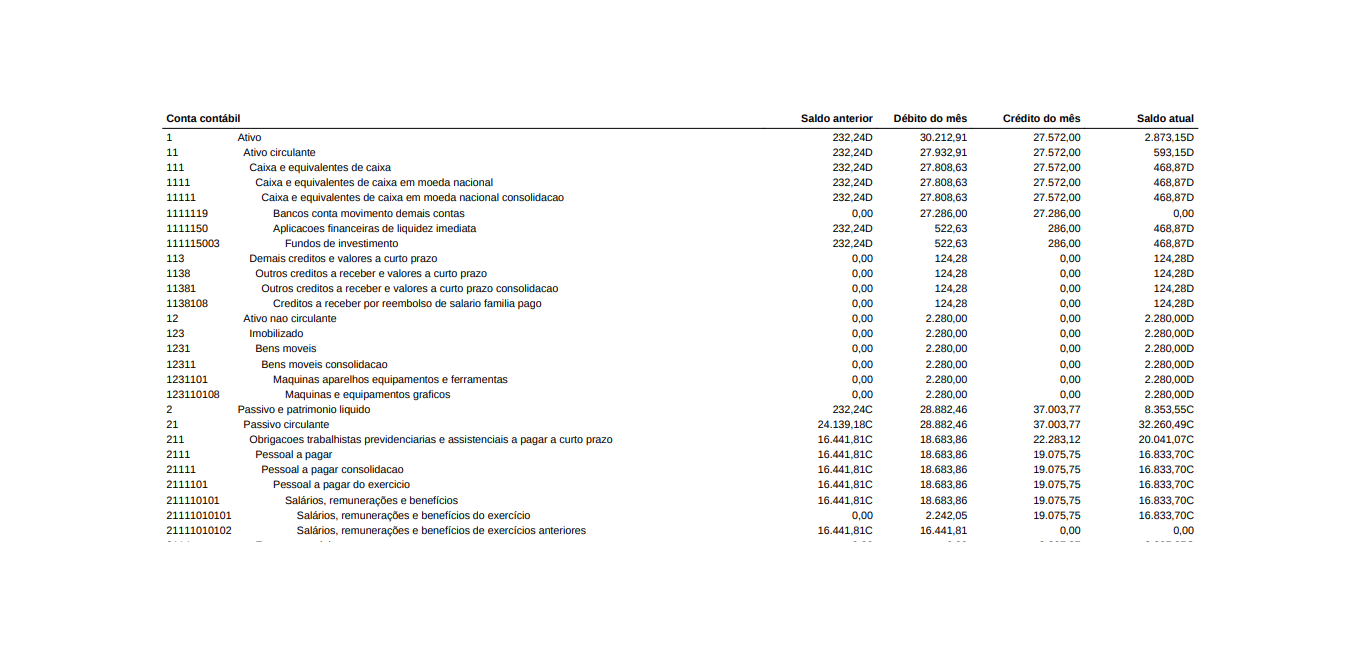

Report result

Report result

Atenciosamente,

Evandro Abreu.

Engenheiro de Sistemas at STS Informática Ltda.

Google Talk: evandro.abreu

Twitter: http://twitter.com/abreu_evandro

Skype: evandro_abreu

Phone: +55 86 98835-0468

Attachment

Hi, Please don't send images to the list, you can send a link to one of the image host websites if you need to describe something graphical. But here, you could just send the queries and \d for the tables. On Sat, Feb 09, 2019 at 01:45:50PM -0300, Evandro Abreu wrote: > I have a report that takes about 20 minutes to generate. It is generated > from 3 tables: according to image. > The report input parameter is a date range. So to generate it I select all > records in Table A and run them > in loop-for. For each record in Table A I make a query Table B join with > Table C where I filter the records through the date field and make the sum > of the value field. So you're running query 5000 times ? Do you really need a for loop ? Could you just join the 3 tables together and GROUP BY a.id ? Please send "explain analyze" for the queries, or a link to the output on depesz site. https://wiki.postgresql.org/wiki/Slow_Query_Questions#Information_You_Need_To_Include Also, are they all taking about the same amount of time ? Justin

Hi, On 2019-02-09 11:16:33 -0600, Justin Pryzby wrote: > Please don't send images to the list, you can send a link to one of the image > host websites if you need to describe something graphical. FWIW, I don't agree with that policy. I plenty of time process email while withoug internet, so I appreciate self contained email. Greetings, Andres Freund

Hi,

Do you have an index in the date field?

Obtener Outlook para Android

From: Andres Freund <andres@anarazel.de>

Sent: Saturday, February 9, 2019 5:23:14 PM

To: Justin Pryzby

Cc: Evandro Abreu; pgsql-performance@lists.postgresql.org

Subject: Re: slow to run query 5000 times

Sent: Saturday, February 9, 2019 5:23:14 PM

To: Justin Pryzby

Cc: Evandro Abreu; pgsql-performance@lists.postgresql.org

Subject: Re: slow to run query 5000 times

Hi,

On 2019-02-09 11:16:33 -0600, Justin Pryzby wrote:

> Please don't send images to the list, you can send a link to one of the image

> host websites if you need to describe something graphical.

FWIW, I don't agree with that policy. I plenty of time process email

while withoug internet, so I appreciate self contained email.

Greetings,

Andres Freund

On 2019-02-09 11:16:33 -0600, Justin Pryzby wrote:

> Please don't send images to the list, you can send a link to one of the image

> host websites if you need to describe something graphical.

FWIW, I don't agree with that policy. I plenty of time process email

while withoug internet, so I appreciate self contained email.

Greetings,

Andres Freund

Hi,

it will be good if you could post the queries you use + the explain output.

Thanks

Le sam. 9 févr. 2019 à 17:46, Evandro Abreu <evandro.abreu@gmail.com> a écrit :

I have a report that takes about 20 minutes to generate. It is generated from 3 tables: according to image.The report input parameter is a date range. So to generate it I select all records in Table A and run themin loop-for. For each record in Table A I make a query Table B join with Table C where I filter the records through the date field and make the sumof the value field.Given this scenario, I would like your help in finding a solution that can reduce the generation time of this report. System developed in PHP / Laravel.PostgreSQLmax_connections = 50shared_buffers = 4GBeffective_cache_size = 12GBmaintenance_work_mem = 1GBcheckpoint_completion_target = 0.7wal_buffers = 16MBdefault_statistics_target = 100random_page_cost = 4effective_io_concurrency = 2work_mem = 83886kBmin_wal_size = 1GBmax_wal_size = 2GBLinux Server CentOS 7, Single Xeon 4-Core E3-1230 v5 3.4Ghz w / HT, 16GB RAM.I've already created indexes in the fields that are involved in the queries.Database schemaReport result

--Atenciosamente,Evandro Abreu.Engenheiro de Sistemas at STS Informática Ltda.Google Talk: evandro.abreuTwitter: http://twitter.com/abreu_evandroSkype: evandro_abreuPhone: +55 86 98835-0468