Thread: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

PG Bug reporting form

Date:

The following bug has been logged on the website: Bug reference: 17974 Logged by: Michael Guissine Email address: mguissine@gmail.com PostgreSQL version: 14.8 Operating system: AWS RDS Description: We are running relatively large and busy Postgres database on RDS and using logical replication extensively. We currently have 7 walsenders and while we often see replication falls behind due to high transactional volume, we've never experienced memory issues in 14.6 and below. After recent upgrade to 14.8, we already had several incidents where walsender processes RES memory would suddenly increase to over 80GB each causing freeable memory on the instance to go down to zero. Interesting that even after Instance reboot, the memory used by walsender processes won't get released until we restart the replication and drop the logical slots. The logical_decoding_work_mem was set to 512MB in time of the last incident but we recently lowered it to 128MB. Any known issues in pg 14.8 that would trigger this behaviour?

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Michael Paquier

Date:

On Wed, Jun 14, 2023 at 12:05:32AM +0000, PG Bug reporting form wrote: > We are running relatively large and busy Postgres database on RDS and using > logical replication extensively. We currently have 7 walsenders and while we > often see replication falls behind due to high transactional volume, we've > never experienced memory issues in 14.6 and below. After recent upgrade to > 14.8, we already had several incidents where walsender processes RES memory > would suddenly increase to over 80GB each causing freeable memory on the > instance to go down to zero. Interesting that even after Instance reboot, > the memory used by walsender processes won't get released until we restart > the replication and drop the logical slots. The logical_decoding_work_mem > was set to 512MB in time of the last incident but we recently lowered it to > 128MB. > > Any known issues in pg 14.8 that would trigger this behaviour? Yes, there are known issues with memory handling in logical replication setups. See for example this thread: https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com This is not a simple problem, unfortunately :/ -- Michael

Attachment

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Andres Freund

Date:

Hi, On 2023-06-14 10:23:32 +0900, Michael Paquier wrote: > On Wed, Jun 14, 2023 at 12:05:32AM +0000, PG Bug reporting form wrote: > > We are running relatively large and busy Postgres database on RDS and using > > logical replication extensively. We currently have 7 walsenders and while we > > often see replication falls behind due to high transactional volume, we've > > never experienced memory issues in 14.6 and below. After recent upgrade to > > 14.8, we already had several incidents where walsender processes RES memory > > would suddenly increase to over 80GB each causing freeable memory on the > > instance to go down to zero. When postgres knows it ran out of memory (instead of having gotten killed by the OOM killer), it'll dump memory context information to the log. Could you check whether there are related log entries? They should precede an "out of memory" ERROR. > > Interesting that even after Instance reboot, > > the memory used by walsender processes won't get released until we restart > > the replication and drop the logical slots. The logical_decoding_work_mem > > was set to 512MB in time of the last incident but we recently lowered it to > > 128MB. That seems very unlikely to be the case. If you restarted postgres or postgres and the OS, there's nothing to have allocated the memory. What exactly do you mean by "Instance reboot"? > > Any known issues in pg 14.8 that would trigger this behaviour? > > Yes, there are known issues with memory handling in logical > replication setups. See for example this thread: > https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com Why would 14.8 have made that problem worse? Greetings, Andres Freund

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Michael Guissine

Date:

Thanks Andres, see below

On Wed, Jun 14, 2023 at 6:15 PM Andres Freund <andres@anarazel.de> wrote:

Hi,

On 2023-06-14 10:23:32 +0900, Michael Paquier wrote:

> On Wed, Jun 14, 2023 at 12:05:32AM +0000, PG Bug reporting form wrote:

> > We are running relatively large and busy Postgres database on RDS and using

> > logical replication extensively. We currently have 7 walsenders and while we

> > often see replication falls behind due to high transactional volume, we've

> > never experienced memory issues in 14.6 and below. After recent upgrade to

> > 14.8, we already had several incidents where walsender processes RES memory

> > would suddenly increase to over 80GB each causing freeable memory on the

> > instance to go down to zero.

When postgres knows it ran out of memory (instead of having gotten killed by

the OOM killer), it'll dump memory context information to the log. Could you

check whether there are related log entries? They should precede an "out of

memory" ERROR.

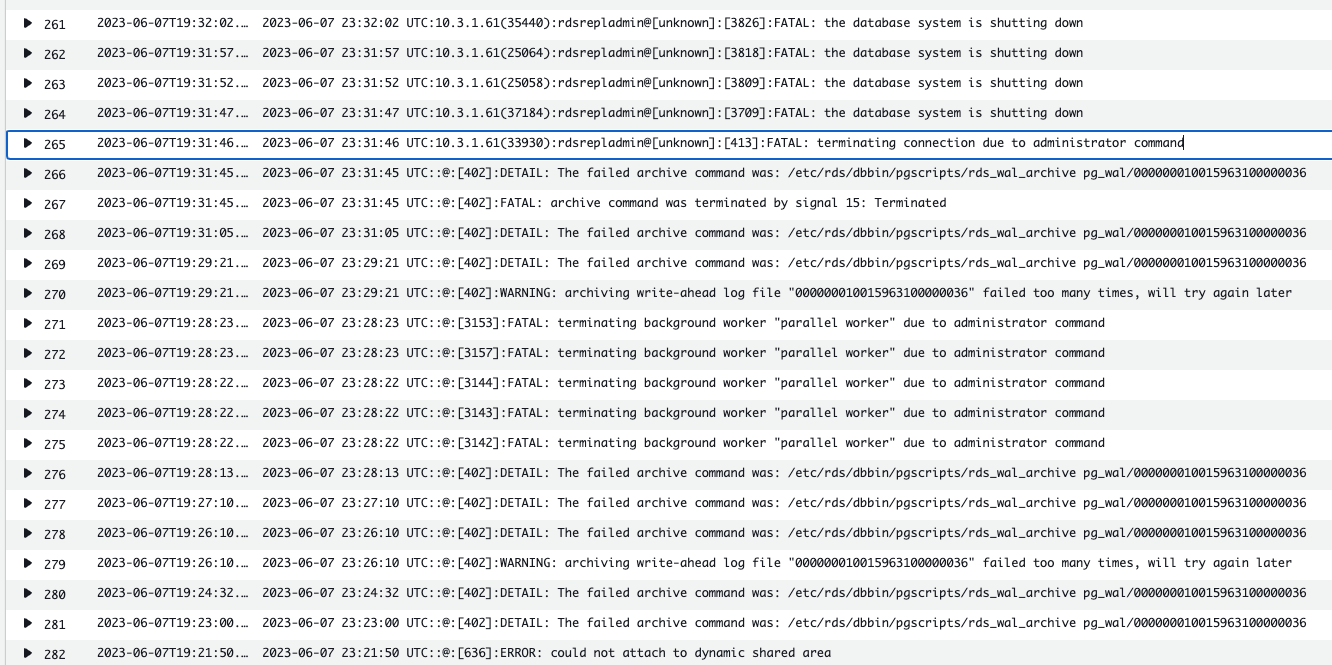

There are no "out of memory errors" in the logs, the RDS instance was restarted by AWS in response to the memory issues on the host (most likely). This is all I see in the RDS logs at the time of the failure

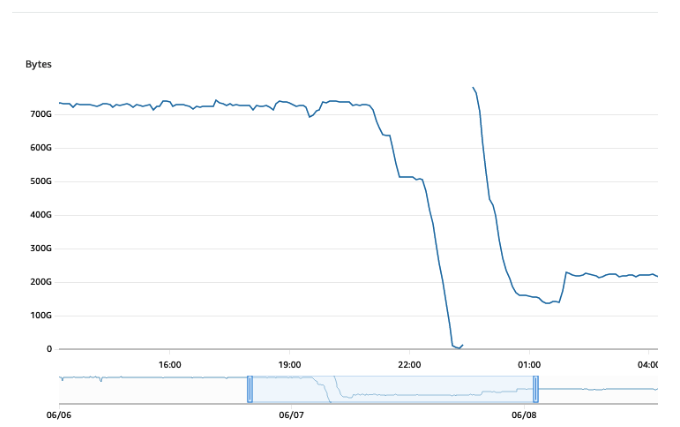

There was sudden freeable memory drop prior to the incident

note that after restart, the memory didn't fully recovered even though we had everything stopped except the logical replication

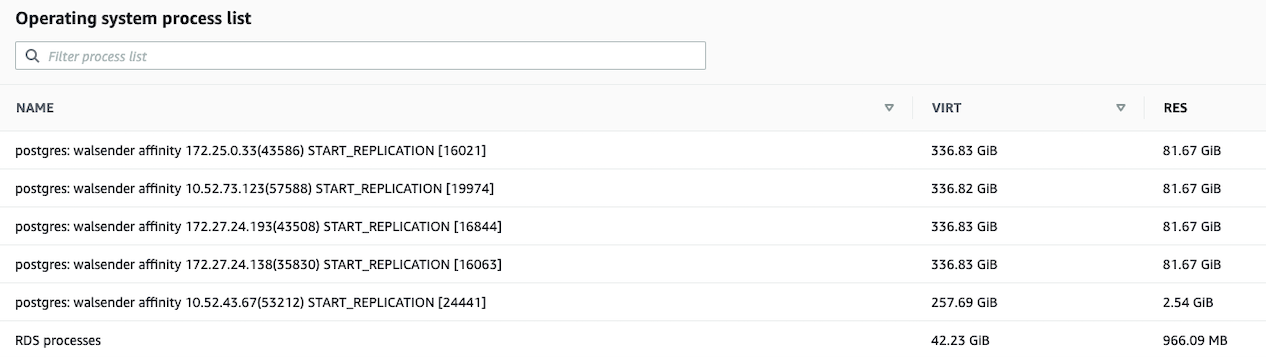

this is a view into processes list after the instance has recovered, showing that walsenders are still holding to 80GB+ of res memory

> > Interesting that even after Instance reboot,

> > the memory used by walsender processes won't get released until we restart

> > the replication and drop the logical slots. The logical_decoding_work_mem

> > was set to 512MB in time of the last incident but we recently lowered it to

> > 128MB.

That seems very unlikely to be the case. If you restarted postgres or postgres

and the OS, there's nothing to have allocated the memory. What exactly do you

mean by "Instance reboot"?

By instance reboot I meant AWS terminating postgres process and restarting

> > Any known issues in pg 14.8 that would trigger this behaviour?

>

> Yes, there are known issues with memory handling in logical

> replication setups. See for example this thread:

> https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com

Why would 14.8 have made that problem worse?

This is an assumption obviously, based on the fact that we have been running this database for several years already and haven't seen similar memory issues until the recent upgrade to 14.8.

Greetings,

Andres Freund

Attachment

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Michael Paquier

Date:

On Wed, Jun 14, 2023 at 08:04:53PM -0400, Michael Guissine wrote: > On Wed, Jun 14, 2023 at 6:15 PM Andres Freund <andres@anarazel.de> wrote: >>>> Any known issues in pg 14.8 that would trigger this behaviour? >>> >>> Yes, there are known issues with memory handling in logical >>> replication setups. See for example this thread: >>> >>> https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com >> >> Why would 14.8 have made that problem worse? Hmm? I don't necessarily imply that 14.8 made it worse, but that there are known issues in this area as the other thread mention (which is mainly about v15, actually, where the situation could be a bit better). > This is an assumption obviously, based on the fact that we have been > running this database for several years already and haven't seen similar > memory issues until the recent upgrade to 14.8. Was that all the time with 14 as base? What are the minor versions you used in the past? Perhaps there is a correlation here that could help a bit. There have been a few things in this area lately, like 9f2cc1a, 4fbe609, 68dcce2, fd270b7 or 8b9cbd4 (this one made the decoding cheaper), so it could be possible that a defect has been introduced. Being able to know the contexts where the memory is piling up would help a lot, for sure. -- Michael

Attachment

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Michael Guissine

Date:

Hi Michael, see below

On Wed., Jun. 14, 2023, 21:02 Michael Paquier, <michael@paquier.xyz> wrote:

On Wed, Jun 14, 2023 at 08:04:53PM -0400, Michael Guissine wrote:

> On Wed, Jun 14, 2023 at 6:15 PM Andres Freund <andres@anarazel.de> wrote:

>>>> Any known issues in pg 14.8 that would trigger this behaviour?

>>>

>>> Yes, there are known issues with memory handling in logical

>>> replication setups. See for example this thread:

>>>

>>> https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com

>>

>> Why would 14.8 have made that problem worse?

Hmm? I don't necessarily imply that 14.8 made it worse, but that

there are known issues in this area as the other thread mention (which

is mainly about v15, actually, where the situation could be a bit

better).

> This is an assumption obviously, based on the fact that we have been

> running this database for several years already and haven't seen similar

> memory issues until the recent upgrade to 14.8.

Was that all the time with 14 as base? What are the minor versions

you used in the past? Perhaps there is a correlation here that could

help a bit. There have been a few things in this area lately, like

9f2cc1a, 4fbe609, 68dcce2, fd270b7 or 8b9cbd4 (this one made the

decoding cheaper), so it could be possible that a defect has been

introduced. Being able to know the contexts where the memory is

piling up would help a lot, for sure.

We started with v 9 or 10 originally I think but the recent upgrade was from v14.6 to v14.8

--

Michael

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Masahiko Sawada

Date:

On Thu, Jun 15, 2023 at 10:02 AM Michael Paquier <michael@paquier.xyz> wrote: > > On Wed, Jun 14, 2023 at 08:04:53PM -0400, Michael Guissine wrote: > > On Wed, Jun 14, 2023 at 6:15 PM Andres Freund <andres@anarazel.de> wrote: > >>>> Any known issues in pg 14.8 that would trigger this behaviour? > >>> > >>> Yes, there are known issues with memory handling in logical > >>> replication setups. See for example this thread: > >>> > >>> https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com > >> > >> Why would 14.8 have made that problem worse? > > Hmm? I don't necessarily imply that 14.8 made it worse, but that > there are known issues in this area as the other thread mention (which > is mainly about v15, actually, where the situation could be a bit > better). Yeah, IIUC the logical decoding could use nearly unlimited memory depending on workloads (see analysis here[1]). Regards, [1] https://www.postgresql.org/message-id/OS3PR01MB6275CAC41E8564D60DC66D229E769%40OS3PR01MB6275.jpnprd01.prod.outlook.com -- Masahiko Sawada Amazon Web Services: https://aws.amazon.com

Re: BUG #17974: Walsenders memory usage suddenly spike to 80G+ causing OOM and server reboot

From

Michael Guissine

Date:

I should stand corrected @Andres Freund , the memory DID recover immediately after reboot, but quickly start dropping again shortly after. It fully recovered only after we dropped and recreated all replication slots.

On Wed, Jun 14, 2023 at 6:15 PM Andres Freund <andres@anarazel.de> wrote:

Hi,

On 2023-06-14 10:23:32 +0900, Michael Paquier wrote:

> On Wed, Jun 14, 2023 at 12:05:32AM +0000, PG Bug reporting form wrote:

> > We are running relatively large and busy Postgres database on RDS and using

> > logical replication extensively. We currently have 7 walsenders and while we

> > often see replication falls behind due to high transactional volume, we've

> > never experienced memory issues in 14.6 and below. After recent upgrade to

> > 14.8, we already had several incidents where walsender processes RES memory

> > would suddenly increase to over 80GB each causing freeable memory on the

> > instance to go down to zero.

When postgres knows it ran out of memory (instead of having gotten killed by

the OOM killer), it'll dump memory context information to the log. Could you

check whether there are related log entries? They should precede an "out of

memory" ERROR.

> > Interesting that even after Instance reboot,

> > the memory used by walsender processes won't get released until we restart

> > the replication and drop the logical slots. The logical_decoding_work_mem

> > was set to 512MB in time of the last incident but we recently lowered it to

> > 128MB.

That seems very unlikely to be the case. If you restarted postgres or postgres

and the OS, there's nothing to have allocated the memory. What exactly do you

mean by "Instance reboot"?

> > Any known issues in pg 14.8 that would trigger this behaviour?

>

> Yes, there are known issues with memory handling in logical

> replication setups. See for example this thread:

> https://www.postgresql.org/message-id/CAMnUB3oYugXCBLSkih+qNsWQPciEwos6g_AMbnz_peNoxfHwyw@mail.gmail.com

Why would 14.8 have made that problem worse?

Greetings,

Andres Freund